Generative Adversarial Registration for Improved Conditional Deformable Templates

Neel Dey¹, Mengwei Ren¹, Adrian Dalca²,³, Guido Gerig¹

¹New York University ²CSAIL, MIT ³Harvard Medical School

ICCV 2021

Abstract

Deformable templates are essential to large-scale medical image registration, segmentation, and population analysis. Current conventional and deep network-based methods for template construction use only regularized registration objectives and often yield templates with blurry and/or anatomically implausible appearance, confounding downstream biomedical interpretation. We reformulate deformable registration and conditional template estimation as an adversarial game wherein we encourage realism in the moved templates with a generative adversarial registration framework conditioned on flexible image covariates. The resulting templates exhibit significant gains in specificity to attributes such as age and disease, better fit underlying group-wise spatiotemporal trends, and achieve improved sharpness and centrality. These improvements enable more accurate population modeling with diverse covariates for standardized downstream analyses and easier anatomical delineation of structures of interest.
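The adversarial game described above can be illustrated with the standard non-saturating GAN losses. The sketch below assumes a discriminator that outputs one logit per image, with real images labelled 1 and moved templates labelled 0, and a hypothetical weighting (`lam_reg`, `lam_adv`) between a conventional registration objective and the adversarial term. The paper's exact adversarial loss and weights may differ; this is only an illustration of the general idea.

```python
import numpy as np

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)

def discriminator_loss(logits_real, logits_fake):
    # Binary cross-entropy, real images labelled 1 and moved templates labelled 0:
    # -log sigmoid(real) - log(1 - sigmoid(fake)) = softplus(-real) + softplus(fake)
    return float(np.mean(softplus(-logits_real)) + np.mean(softplus(logits_fake)))

def generator_adv_loss(logits_fake):
    # Non-saturating generator loss: -log sigmoid(fake) = softplus(-fake).
    # Minimizing it pushes moved templates to be classified as real.
    return float(np.mean(softplus(-logits_fake)))

def total_generator_loss(similarity, smoothness, logits_fake, lam_reg=1.0, lam_adv=0.1):
    # Hypothetical combination: image similarity + deformation regularizer
    # + weighted adversarial realism term (weights are illustrative only).
    return similarity + lam_reg * smoothness + lam_adv * generator_adv_loss(logits_fake)

# With an uninformative discriminator (all logits 0), the discriminator loss is 2*log(2).
print(discriminator_loss(np.zeros(4), np.zeros(4)))
```

The template generator and registration network would minimize `total_generator_loss` while the discriminator minimizes `discriminator_loss`, alternating updates as in a typical GAN training loop.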

Video Overview

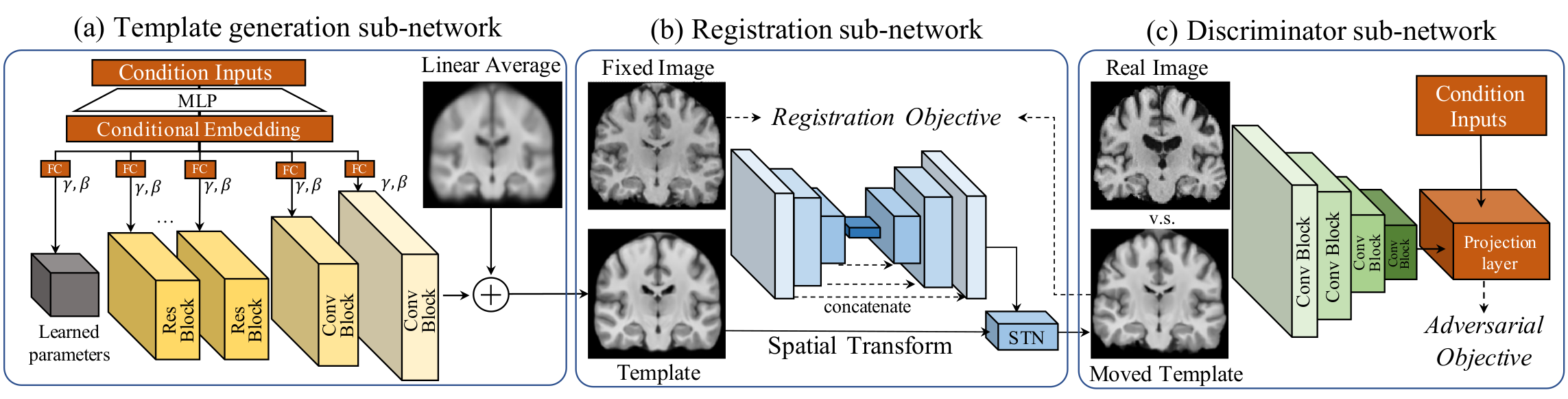

Framework

A template generation network (a) processes an array of learned parameters with a convolutional decoder whose feature-wise affine parameters are learned from the input conditions, producing a conditional template. A registration network (b) warps the generated template to a randomly sampled fixed image. A discriminator (c) is trained to distinguish moved synthesized templates from randomly sampled images, which encourages both realism and condition-specificity.
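Components (a) and (b) can be sketched as a toy 2D NumPy pipeline: a learned parameter array is modulated channel-wise by a scale and shift predicted from a condition vector (feature-wise linear modulation), projected to a single-channel template, and then warped with a dense displacement field by bilinear interpolation. The linear condition-to-(gamma, beta) maps, the single modulation layer, the 1x1 output projection, and the fixed displacement field (which the framework obtains from the registration network) are all simplifying assumptions, not the authors' architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def film(features, condition, w_gamma, w_beta):
    # Feature-wise affine modulation: per-channel scale (gamma) and shift (beta)
    # predicted from the condition vector by hypothetical linear maps.
    gamma = condition @ w_gamma  # shape (C,)
    beta = condition @ w_beta    # shape (C,)
    return gamma[:, None, None] * features + beta[:, None, None]

def conditional_template(params, condition, w_gamma, w_beta, w_out):
    # params: learned array of shape (C, H, W), shared across all conditions.
    h = np.maximum(film(params, condition, w_gamma, w_beta), 0.0)  # modulation + ReLU
    # 1x1 projection collapsing C channels into a single-channel template (H, W).
    return np.tensordot(w_out, h, axes=1)

def warp_bilinear(image, flow):
    # Output at (y, x) samples image at (y + flow[0], x + flow[1]) using
    # bilinear interpolation; coordinates are clamped to the image border.
    H, W = image.shape
    yy, xx = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    y = np.clip(yy + flow[0], 0, H - 1)
    x = np.clip(xx + flow[1], 0, W - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, H - 1)
    x1 = np.minimum(x0 + 1, W - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom

# Usage: channels, height, width, condition size (all illustrative).
C, H, W, K = 4, 8, 8, 2
params = rng.normal(size=(C, H, W))
w_gamma, w_beta = rng.normal(size=(K, C)), rng.normal(size=(K, C))
w_out = rng.normal(size=C)
template = conditional_template(params, np.array([0.3, 1.0]), w_gamma, w_beta, w_out)

flow = np.zeros((2, H, W))
flow[1] = 1.0  # sample one pixel to the right everywhere
moved = warp_bilinear(template, flow)
```

In the full framework, the moved template and a randomly sampled real image would both be passed to the discriminator (c).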

Template Visualizations and Comparisons with VoxelMorph-Templates

Age- and disease-conditioned template estimation on the Predict-HD dataset.

(Left) Age-conditioned template estimation on the dHCP dataset. (Right) Interpolating between disease conditions from control subjects (CS) to the Huntington's disease (HD) cohort for the Predict-HD dataset.
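Interpolating between disease conditions amounts to feeding the template generator a sequence of condition vectors that blend the CS and HD settings. The sketch below assumes a hypothetical two-element condition encoding (normalized age, disease indicator with 0 = CS and 1 = HD) and plain linear blending; the encoding used for Predict-HD in the paper may differ.

```python
import numpy as np

def interpolate_conditions(c_start, c_end, num_steps):
    # Row i is (1 - t_i) * c_start + t_i * c_end, with t evenly spaced in [0, 1].
    t = np.linspace(0.0, 1.0, num_steps)[:, None]
    return (1.0 - t) * c_start + t * c_end

# Hypothetical encoding: [normalized age, disease indicator (0 = CS, 1 = HD)].
age = 0.5
cs = np.array([age, 0.0])
hd = np.array([age, 1.0])
conds = interpolate_conditions(cs, hd, 5)
# Each row of `conds` would be passed to the conditional template generator,
# holding age fixed while the disease condition moves from CS to HD.
```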

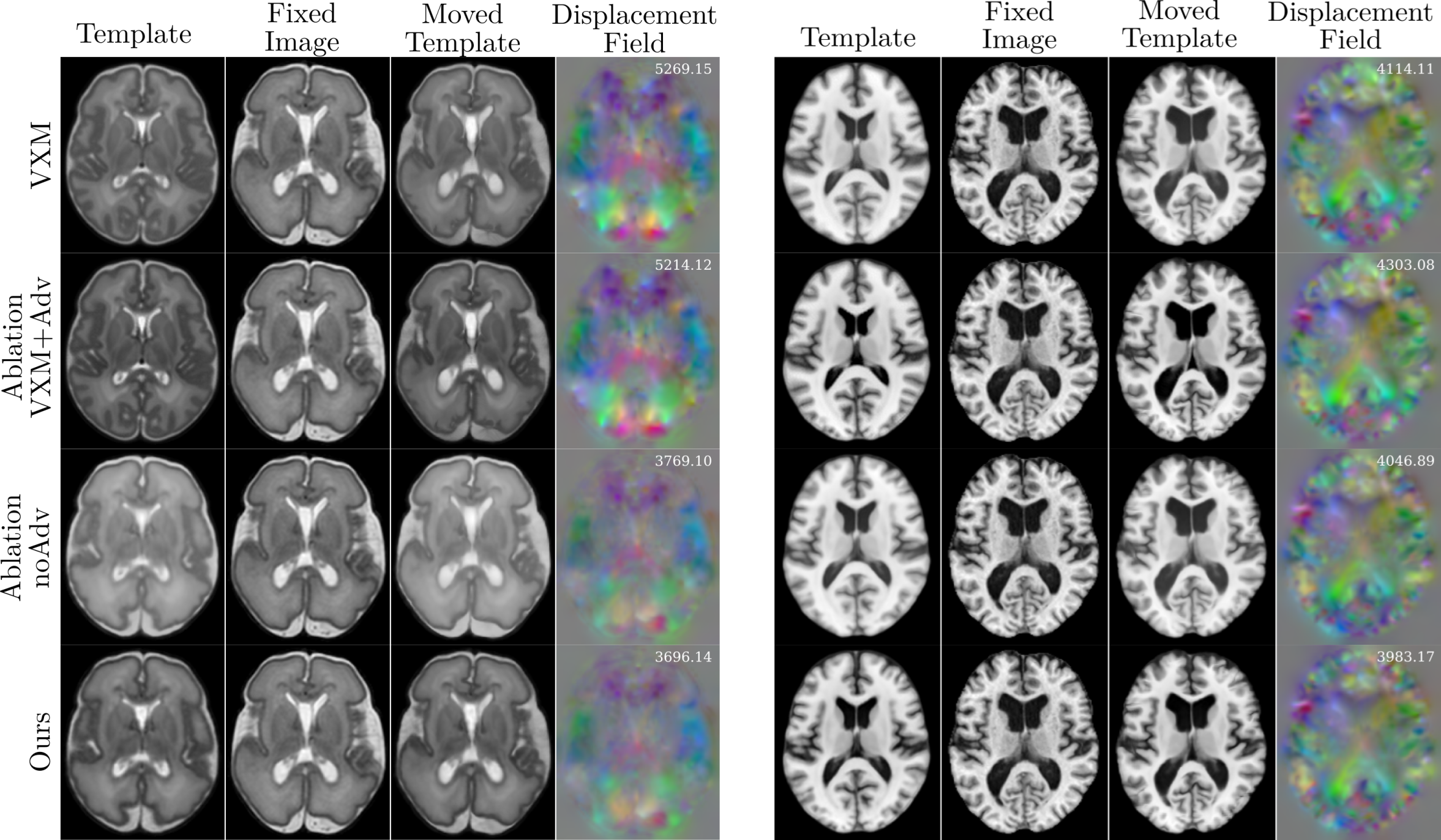

Sample Registrations and Ablations

Sample registration results comparing VoxelMorph-Templates, two ablations of our method, and our full method for dHCP (left) and Predict-HD (right).

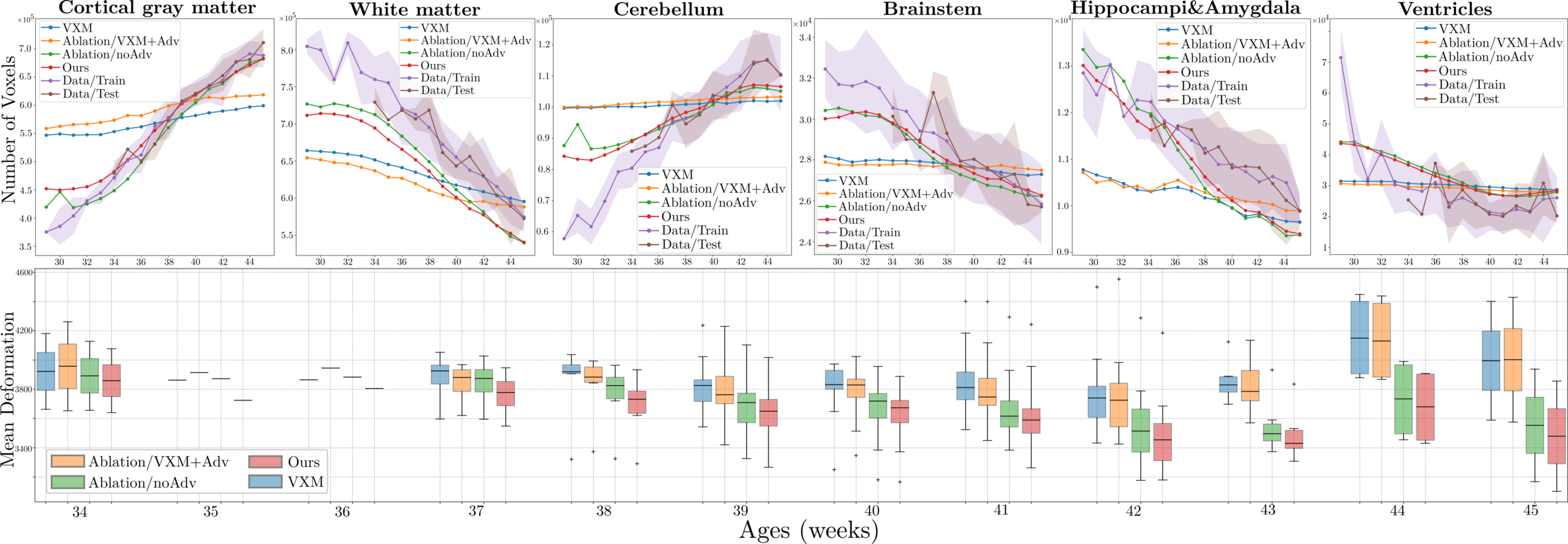

Top: Volumetric trends of dHCP template segmentations for all methods, overlaid upon the volumetric trends for the underlying train (purple) and test (brown) sets. Bottom: Mean deformation norms to held-out test data (lower is better) for all conditional methods.
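The mean deformation norm reported above can be computed from the displacement fields that register a template to each held-out test image; smaller deformations are commonly read as a more central template. The sketch below assumes the metric is the voxel-averaged Euclidean norm of the displacement field, averaged over test subjects, with displacements in voxel units and no masking. The paper may use physical units, a brain mask, or a different aggregation.

```python
import numpy as np

def mean_deformation_norm(flow):
    # flow: displacement field of shape (D, *spatial), one component per axis.
    # Returns the Euclidean displacement magnitude averaged over all voxels.
    return float(np.mean(np.linalg.norm(flow, axis=0)))

def average_over_subjects(flows):
    # Average the per-subject mean deformation norms (lower is better).
    return float(np.mean([mean_deformation_norm(f) for f in flows]))

# Example: a uniform (3, 4) displacement has magnitude 5 at every voxel.
uniform = np.stack([np.full((4, 4), 3.0), np.full((4, 4), 4.0)])
identity = np.zeros((2, 4, 4))
print(average_over_subjects([uniform, identity]))
```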

BibTeX

@misc{dey2021generative,
  title={Generative Adversarial Registration for Improved Conditional Deformable Templates},
  author={Neel Dey and Mengwei Ren and Adrian V. Dalca and Guido Gerig},
  year={2021},
  eprint={2105.04349},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}